Britain Just Banned Deepfake Porn. Now Comes the Hard Part.

Britain just announced it will criminalize the creation and sharing of sexually explicit deepfakes. The law, introduced as part of the government's Crime and Policing Bill, targets the growing problem of AI-generated pornographic images that can place anyone's likeness into explicit content without their consent. Offenders will face prosecution for both creating and sharing these images, with penalties including fines and potential jail time of up to two years.

The timing isn't arbitrary. According to Britain's Revenge Porn Helpline, deepfake abuse has increased 400% since 2017. These aren't just statistics; they represent real harm to real people, predominantly women and girls, who face what campaigner Jess Davies accurately calls "a total loss of control over their digital footprint." When someone can take your likeness and insert it into sexually explicit content without your consent, they're not just violating your privacy. They're weaponizing your identity against you.

The government is absolutely right to act. The moral clarity here is refreshing: "There is no excuse for creating a sexually explicit deepfake of someone without their consent," says the justice ministry, and they're correct. This isn't one of those complex policy trade-offs where we need to balance competing goods. Non-consensual sexually explicit deepfakes are wrong, full stop.

But this is where it gets tricky: being morally right doesn't automatically make a law enforceable.

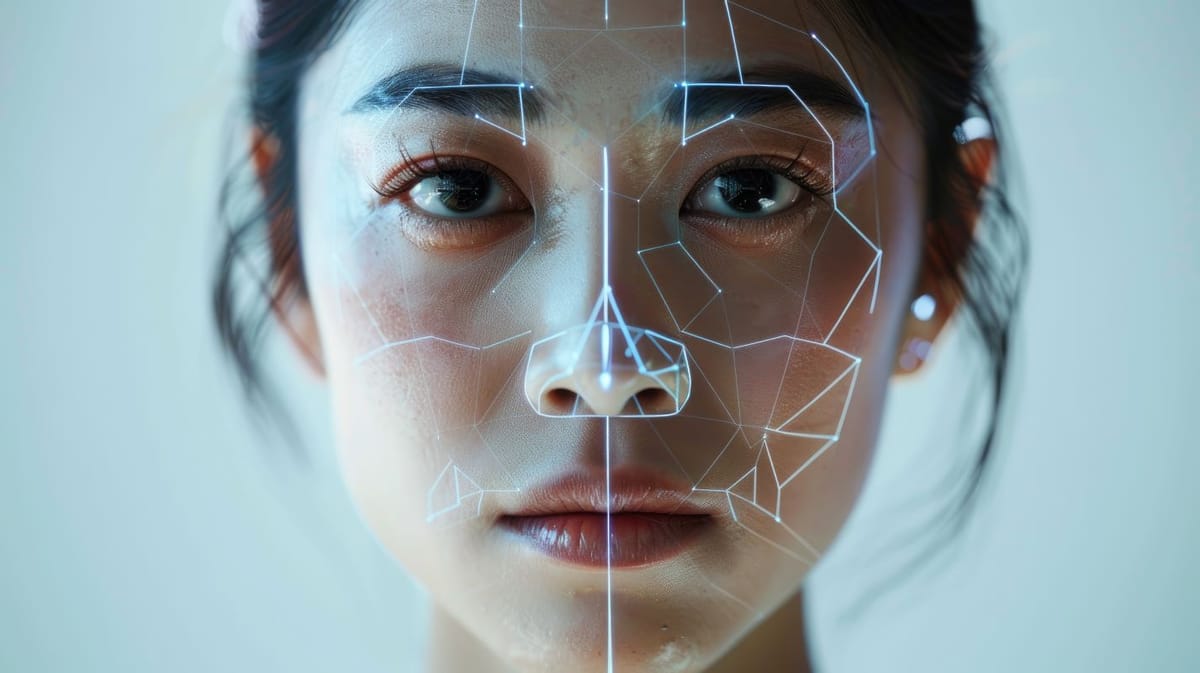

Think about the technical architecture of deepfake generation. Unlike traditional revenge porn, which requires actual intimate images and typically spreads through centralized distribution platforms, deepfakes can be created entirely from public photos using locally run AI models. The technology is mathematics running on personal computers. This makes detection and enforcement extraordinarily difficult, because the act of creation can leave no trace anywhere outside the creator's own machine.

The jurisdiction problem compounds this challenge. Britain can pass whatever laws it wants, but deepfake generation tools are globally distributed. Much of the code is open source, and the models are freely downloadable. Unless every major nation implements and enforces similar legislation (good luck with that), determined bad actors can simply operate from wherever enforcement is weakest.

Then there's the pace of technological change. Today's deepfake detection tools might work reasonably well against today's generation techniques, but this creates an arms race: as detection improves, generation will evolve to become more sophisticated and harder to trace. The law assumes a relatively static technological landscape, yet we're dealing with a rapidly moving target.

None of these challenges make the law worthless. Even if enforcement is imperfect, having clear legal frameworks that criminalize this behavior serves several valuable purposes. It provides clear moral signaling about societal values. It gives platforms legal cover for aggressive content moderation. It enables prosecution of the most egregious and traceable cases. And perhaps most importantly, it creates incentives for platforms to develop better detection tools.

This is actually a pattern we've seen before with revenge porn laws. Perfect enforcement wasn't possible there either, but the laws still reduced harm by creating better incentive structures and clearer enforcement mechanisms.

The key difference is that deepfakes represent a significantly harder technical challenge. With traditional revenge porn, the content has a clear provenance - real intimate images taken at a specific time and place. Deepfakes can be generated from any photos, using techniques that become more sophisticated by the month.

We need a two-track approach: robust laws to handle the cases we can enforce, combined with aggressive investment in technical solutions for detection and prevention. Britain's law is a good start on the first track. But without corresponding progress on technical countermeasures, we're basically trying to enforce speed limits without radar guns.

This is a new kind of regulatory challenge. We're not just trying to stop bad behavior; we're trying to regulate the output of mathematical functions running on private computers. The law is necessary but not sufficient. The real work lies in developing technical and social frameworks that can meaningfully address this new category of harm.

So yes, Britain is right to pass this law. The alternative, doing nothing while this technology proliferates, is morally unconscionable. But we should be clear-eyed about the implementation challenges. This isn't just about writing the right laws; it's about developing new enforcement paradigms for a world where anyone with a laptop can manipulate reality.